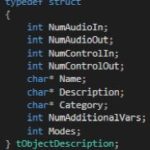

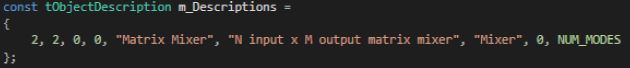

The features below are the ones most developers will need when developing an audio object. Knowing the order in which these methods are called during initialization may also be helpful to audio object developers.

Constructor

The audio object constructor is called by the framework. Audio object developers should use the constructor to initialize their class to a suitable state. No member variable or pointer should be left uninitialized.

CAudioObject::CAudioObject()

The constructor initializes various member variables.
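As a minimal sketch of this rule, assuming a hypothetical `GainSketch` class (a stand-in that does not derive from the real `CAudioObject`), the constructor below gives every member a defined value in its initializer list. The member names are illustrative only.

```cpp
#include <cstddef>

// Hypothetical stand-in for an audio object; not the real xAF CAudioObject.
class GainSketch {
public:
    // Every member gets a defined value in the initializer list,
    // so nothing is left uninitialized before init() runs.
    GainSketch()
        : m_numChannels(0), m_gains(nullptr), m_initialized(false) {}

    std::size_t numChannels() const { return m_numChannels; }
    const float* gains() const { return m_gains; }
    bool initialized() const { return m_initialized; }

private:
    std::size_t m_numChannels;  // channel count, set later by configuration
    float* m_gains;             // per-channel gain memory, assigned later
    bool m_initialized;         // set once initialization has completed
};
```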

GetSize

This method is pure virtual in the base class, so it MUST be implemented by every audio object. It simply returns the class size of the given audio object.

/**

* Returns the size of an audio object

*/

virtual unsigned int getSize() const = 0;
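A derived object would typically satisfy this requirement by returning the size of its own class. The sketch below assumes a hypothetical base class `AudioObjectBaseSketch` that models only `getSize()`; it is not the real framework base class.

```cpp
// Hypothetical stand-in for the framework base class; only getSize() is modeled.
class AudioObjectBaseSketch {
public:
    virtual ~AudioObjectBaseSketch() {}
    virtual unsigned int getSize() const = 0;  // same signature as shown above
};

// Hypothetical derived object with some state of its own.
class DelaySketch : public AudioObjectBaseSketch {
public:
    DelaySketch() : m_delaySamples(0), m_writeIndex(0) {}

    // Report the size of the most-derived class, not of the base class.
    unsigned int getSize() const override {
        return static_cast<unsigned int>(sizeof(DelaySketch));
    }

private:
    unsigned int m_delaySamples;
    unsigned int m_writeIndex;
};
```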

Init

This function initializes all internal variables and parameters. It is called by the framework (CAudioProcessing class). In this method, the object should initialize all its memory to appropriate values that match the device description specified in the toolbox methods (getXmlFileInfo, etc.).

void CAudioObject::init()

{

}

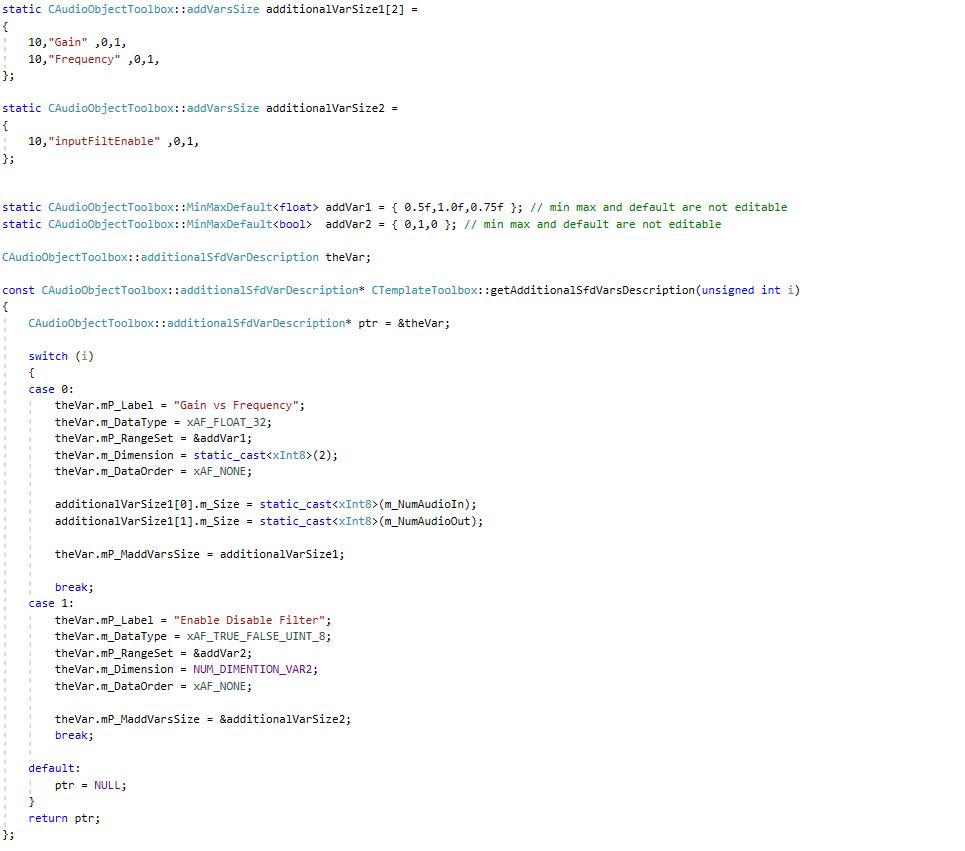

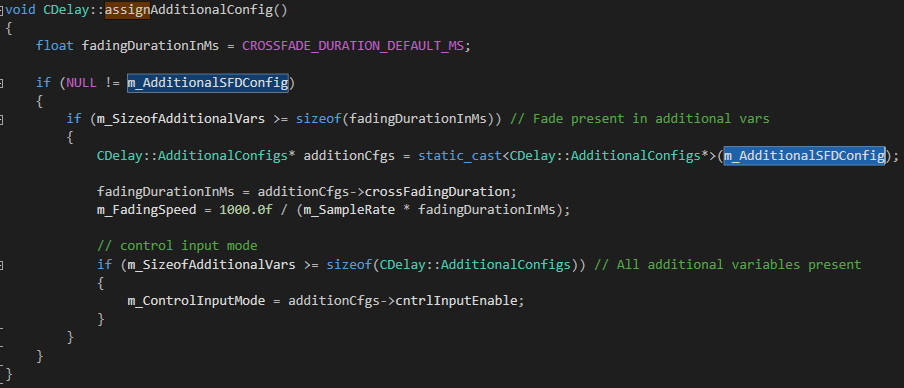

If the audio object requires additional configuration variables, the developer must also implement the method that initializes that data, assignAdditionalConfig(). For more details, refer to Audio Object Additional Configuration.

If this method is not overridden, the default implementation is empty and does nothing.
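To illustrate the role of init(), here is a minimal sketch in which a hypothetical gain object sets all of its parameter memory to a default value. The class `GainInitSketch`, the constant `kDefaultGain`, and the use of `std::vector` are assumptions for illustration; a real object would use the memory and defaults defined by its own device description.

```cpp
#include <cstddef>
#include <vector>

// Assumed default advertised by the (hypothetical) device description.
const float kDefaultGain = 1.0f;

// Hypothetical stand-in; a real object would derive from CAudioObject and
// use framework-assigned memory instead of std::vector.
class GainInitSketch {
public:
    explicit GainInitSketch(std::size_t numChannels)
        : m_numChannels(numChannels), m_gains(), m_ready(false) {}

    // Mirrors the role of init(): bring all parameter memory to its defaults.
    void init() {
        m_gains.assign(m_numChannels, kDefaultGain);
        m_ready = true;
    }

    bool ready() const { return m_ready; }
    const std::vector<float>& gains() const { return m_gains; }

private:
    std::size_t m_numChannels;
    std::vector<float> m_gains;
    bool m_ready;
};
```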

Calc

The calc() function executes the audio object’s audio algorithm every time an audio interrupt is received. It is called by the CAudioProcessing class only while the audio object is in the “NORMAL” processing state, and it takes pointers to the input and output audio streams.

The audio inputs and outputs are currently placed in two different buffers for out-of-place computation: xAF expects the audio samples stored in the input buffers to be processed and the results stored in the output buffers.

void CAudioObject::calc(xAFAudio** inputs, xAFAudio** outputs)

{

}

If the object/algorithm does not have any logic to execute during an audio interrupt, this method does not need to be overridden. By default, it is empty. For example, some control objects do not need to execute any logic during an interrupt and, accordingly, they do not override this method.
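The out-of-place convention can be sketched with a simple gain stage. This example assumes that `xAFAudio` is a `float` sample type and that `inputs[ch]` and `outputs[ch]` each point to one block of samples per channel; the real typedef and buffer layout are defined by the xAF headers and may differ. The class `GainCalcSketch` is hypothetical.

```cpp
#include <cassert>
#include <cstddef>

// Assumption: xAFAudio is modeled as a float sample for this sketch.
typedef float xAFAudio;

// Hypothetical gain object showing out-of-place processing.
class GainCalcSketch {
public:
    GainCalcSketch(std::size_t numChannels, std::size_t blockLength, float gain)
        : m_numChannels(numChannels), m_blockLength(blockLength), m_gain(gain) {}

    // Read from inputs[ch][n] and write to outputs[ch][n];
    // the input buffers are never modified.
    void calc(xAFAudio** inputs, xAFAudio** outputs) {
        assert(inputs != nullptr && outputs != nullptr);
        for (std::size_t ch = 0; ch < m_numChannels; ++ch) {
            const xAFAudio* in = inputs[ch];
            xAFAudio* out = outputs[ch];
            for (std::size_t n = 0; n < m_blockLength; ++n) {
                out[n] = in[n] * m_gain;
            }
        }
    }

private:
    std::size_t m_numChannels;
    std::size_t m_blockLength;
    float m_gain;
};
```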

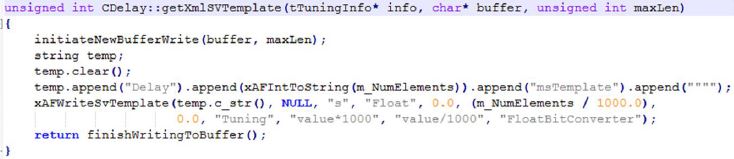

TuneXTP

This functionality alerts an object when its parameter memory is updated or modified. It provides information on the exact variables that were modified and allows the object to update internal variables accordingly. An example is a filter block, where a parameter update (for example, a gain, frequency, or Q change) triggers the recalculation of the filter coefficients in the tuning code. The API triggered is CAudioObject::tuneXTP().

void CAudioObject::tuneXTP(int subblock, int startMemBytes, int sizeBytes, xBool shouldAttemptRamp)

{

}

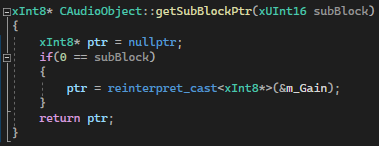

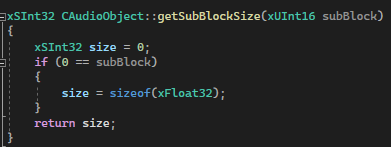

An ID-based memory addressing scheme is used instead of pure memory offsets. The CAudioObject→tuneXTP() method is called by the framework in CAudioProcessing→setAudioObjectTuning().

CAudioObject::tuneXTP() takes four arguments:

- subblock: index of the sub-block in the audio object.

- startMemBytes: memory offset (in bytes) from the beginning of the sub-block memory.

- sizeBytes: size (in bytes) of the parameter memory that was tuned.

- shouldAttemptRamp: flag indicating whether the audio object should attempt to ramp the tuning parameters.

The subblock, startMemBytes and sizeBytes values are passed into the audio object so that it can calculate which elements it needs to tune.
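One way an object might turn the byte offset and byte size into a range of affected parameters is sketched below. The helper `tunedRange` and the struct `TunedRange` are hypothetical, and the sketch assumes the sub-block is a packed array of parameters that all have the same size.

```cpp
#include <cassert>
#include <cstddef>

// Result of mapping a byte range onto whole parameters.
struct TunedRange {
    std::size_t first;  // index of the first parameter touched
    std::size_t count;  // number of parameters touched
};

// Hypothetical helper: assumes the sub-block is a packed array of
// fixed-size parameters (paramSizeBytes each).
inline TunedRange tunedRange(int startMemBytes, int sizeBytes,
                             std::size_t paramSizeBytes) {
    assert(startMemBytes >= 0 && sizeBytes >= 0 && paramSizeBytes > 0);
    TunedRange r = {0, 0};
    if (sizeBytes == 0) {
        return r;  // nothing was written
    }
    const std::size_t start = static_cast<std::size_t>(startMemBytes);
    const std::size_t end = start + static_cast<std::size_t>(sizeBytes);  // one past the last byte
    r.first = start / paramSizeBytes;
    const std::size_t last = (end - 1) / paramSizeBytes;  // parameter holding the last byte
    r.count = last - r.first + 1;
    return r;
}
```

A write that starts or ends in the middle of a parameter still counts that parameter as touched, which errs on the side of recalculating slightly more rather than missing an update.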

Implementation

Once triggered, the function’s implementation is heavily dependent on the audio object itself and on the corresponding tuning panel (or Device Description/ memory layout) that the tuning tool will use to tune the audio object.

Inside tuneXTP(), the received tuning data is NOT checked against valid range limits.

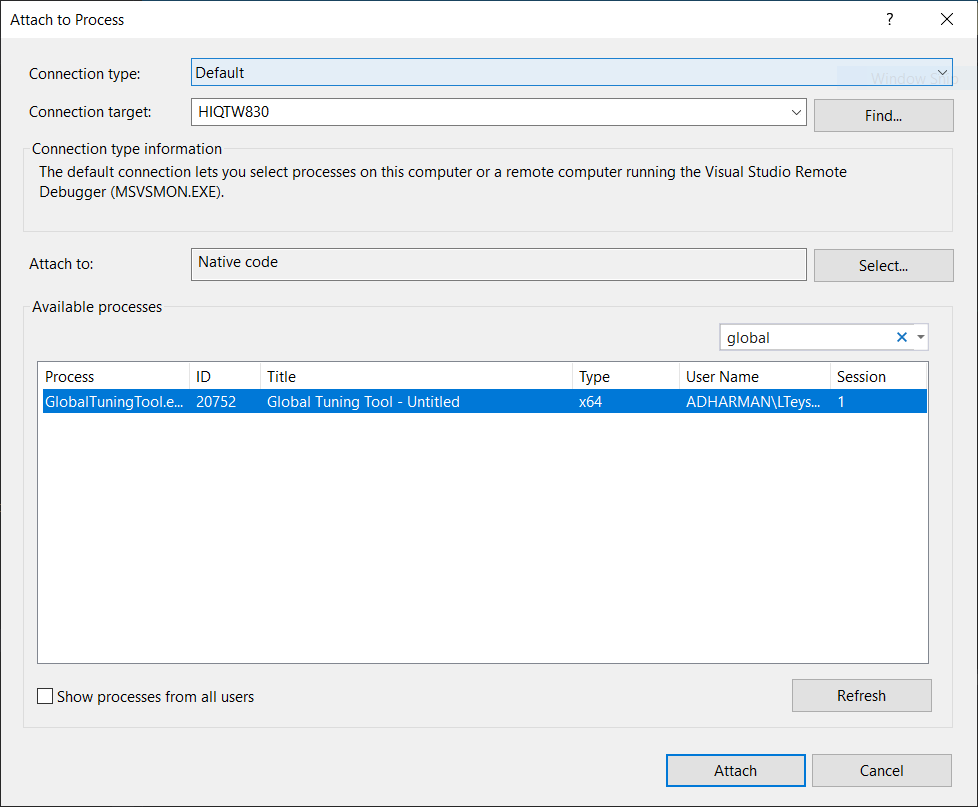

- If the Audio Object (AO) is tuned from GTT, GTT ensures that the tuning parameters remain within the range prescribed by the toolbox methods.

- If the Audio Object (AO) is tuned using methods other than GTT, such as SVE or xTP tuning, it is the responsibility of those entities to ensure that the tuning parameter values remain within the valid range as mentioned in the Audio Object Description User Guide.
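Because tuneXTP() does not validate its data, a tuning entity other than GTT might clamp each value before sending it. The sketch below is one such guard; the `ParamRange` struct and `clampToRange` helper are hypothetical, and the actual limits must come from the object's description.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical range descriptor; real limits come from the object's description.
struct ParamRange {
    float min;
    float max;
};

// Clamp a tuning value into [range.min, range.max] before it is sent.
inline float clampToRange(float value, const ParamRange& range) {
    assert(range.min <= range.max);
    return std::min(std::max(value, range.min), range.max);
}
```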

The developer needs to take into consideration the following while designing an object:

- The set file header size. If an object uses a large number of sub-blocks, each sub-block will require additional header data to store its values in the initial tuning file.

- The number of sub-blocks. If there are a lot of sub-blocks, and developers are tuning a big chunk of data, there will be many more xTP messages sent compared to an audio block with fewer sub-blocks.

- The calculations required. Having more sub-blocks can reduce the calculations required in the CAudioObject::tuneXTP() function, because the audio object can focus its calculations on a narrower section of its memory: the sub-block.

For example, if a parameter biquad block is being tuned, the index or sub-block ID passed in by the CAudioProcessing class could identify:

- A specific channel being tuned. Once triggered, the function should either:

  - Recalculate the filter coefficients characterizing all filters of that specific biquad channel, or

  - Use the memory offset and size variables to recalculate only the filter coefficients whose corresponding parameters were modified.

- A specific filter within a specific channel. Once triggered, the function computes only the coefficients of that single filter.

The xAF team has implemented the Parameter biquad block with the sub-block definition referring to a channel in the biquad block rather than one specific filter. The xAF also uses memory offset and sub-block to recalculate as few filter coefficients as possible.
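The per-channel approach with minimal recalculation can be sketched as follows. This is not the xAF implementation: the `FilterParams` layout, the `BiquadChannelSketch` class, and the `onTuned` method are hypothetical, and the coefficient computation is replaced by a counter so that the selection logic stays visible.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical per-filter parameter layout inside one channel sub-block.
struct FilterParams {
    float type;
    float frequency;
    float gain;
    float q;
};

// Hypothetical channel: one sub-block holding a packed array of FilterParams.
class BiquadChannelSketch {
public:
    explicit BiquadChannelSketch(std::size_t numFilters)
        : m_params(numFilters), m_recalcCount(numFilters, 0) {}

    // Recalculate only the filters whose parameter bytes overlap
    // [startMemBytes, startMemBytes + sizeBytes).
    void onTuned(int startMemBytes, int sizeBytes) {
        assert(startMemBytes >= 0 && sizeBytes >= 0);
        if (sizeBytes == 0) {
            return;
        }
        const std::size_t start = static_cast<std::size_t>(startMemBytes);
        const std::size_t end = start + static_cast<std::size_t>(sizeBytes);
        const std::size_t first = start / sizeof(FilterParams);
        std::size_t last = (end - 1) / sizeof(FilterParams);
        if (first >= m_params.size()) {
            return;  // the write lies entirely outside this sub-block
        }
        if (last >= m_params.size()) {
            last = m_params.size() - 1;
        }
        for (std::size_t f = first; f <= last; ++f) {
            recalcCoefficients(f);
        }
    }

    unsigned recalcCount(std::size_t filter) const { return m_recalcCount[filter]; }

private:
    // Placeholder for the real coefficient computation, omitted in this sketch.
    void recalcCoefficients(std::size_t filter) { ++m_recalcCount[filter]; }

    std::vector<FilterParams> m_params;
    std::vector<unsigned> m_recalcCount;
};
```

A write that straddles two filters triggers a recalculation for both, while filters outside the written range are left untouched.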

The table below gives examples of what the tuning method of each individual audio object triggers:

| Audio Object | Description |
| --- | --- |
| Delay | Sets the delay time in milliseconds. Each channel may have different delay and update buffers. |
| Gain | Sets the gain of each channel. |
| Parameter Biquad | Sets the type, frequency, gain, and quality of a filter and recalculates the filter coefficients for all filters in a channel. |
| Limiter | Sets the limit gain, threshold, attack time, release time, hold time and hold threshold. |
| LevelMonitor | Sets the frequency and time weighting of the level meter. |

Control

Audio object control is carried out through CAudioObject→controlSet(). When a control message is received, it triggers the CAudioProcessing→setControlParameter(..) function, which in turn triggers the Control Input object control function, ControlIn→controlSet(..).

This then routes the control from the control input object to each object intended to receive the message through the audio object controlSet() method.

virtual int controlSet(int pin, float val);

where:

- pin: the input control pin index being updated.

- val: the new value the control pin is being updated with.

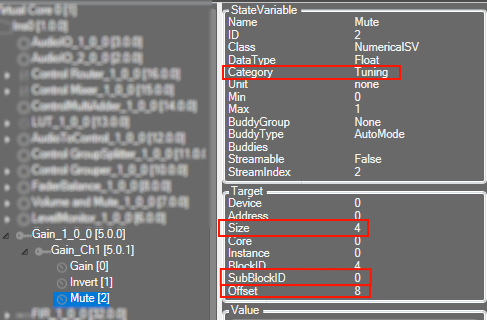

Objects that can be modified by control variables, such as volume, need to configure their object class control input and output values. These control I/Os are determined during the design of the audio object and can depend on the user configuration of the object during the signal design stage.

For example, a volume audio object will have multiple audio input and output channels. It will also have an input control that receives volume changes from the head unit (HU) and an output control that relays those changes to other objects that depend on volume control.

These pins are one-to-one connections between objects. If, at the output of the volume object, the volume control needs to be forwarded to multiple audio objects, the signal designer must add a control relay (ControlMath->splitter) object that can route those signals to multiple objects.

The implementation of this function is specific to the audio object. For example, a volume audio object control command will fade the volume value in the audio object starting from the old gain value and ending with the new gain value sent through the control command.
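The fade behavior described for a volume object can be sketched as a linear ramp from the old gain to the new gain. The class `VolumeFadeSketch`, the ramp length, the single control pin 0, and the return codes (0 for success, -1 for an unknown pin) are all assumptions made for illustration; the real object may use a different ramp shape and different return values.

```cpp
#include <cstddef>

// Hypothetical volume object: controlSet() starts a linear fade that the
// audio loop advances one sample at a time through nextGain().
class VolumeFadeSketch {
public:
    explicit VolumeFadeSketch(std::size_t rampSamples)
        : m_rampSamples(rampSamples), m_current(1.0f), m_target(1.0f),
          m_step(0.0f), m_remaining(0) {}

    // Same shape as controlSet(int pin, float val); return codes are assumed.
    int controlSet(int pin, float val) {
        if (pin != 0) {
            return -1;  // this sketch has a single control input
        }
        m_target = val;
        if (m_rampSamples == 0) {
            m_current = val;  // no ramp configured: jump immediately
            m_remaining = 0;
            return 0;
        }
        // Start from the current (old) gain and move toward the new one.
        m_step = (m_target - m_current) / static_cast<float>(m_rampSamples);
        m_remaining = m_rampSamples;
        return 0;
    }

    // Gain to apply to the next sample; the last step lands exactly on the target.
    float nextGain() {
        if (m_remaining > 0) {
            --m_remaining;
            m_current = (m_remaining == 0) ? m_target : m_current + m_step;
        }
        return m_current;
    }

private:
    std::size_t m_rampSamples;
    float m_current;
    float m_target;
    float m_step;
    std::size_t m_remaining;
};
```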

An object can also pass control to another object connected to its control output via the following CAudioObject→setControlOut(..) method. This method identifies which object should receive the output and passes the value to it.

/*!

* Helper method for writing control to mapped outputs

* \param index - which of the object's control outputs we are writing to

* \param value - value we are writing out to the control output

*/

void setControlOut(int index, float value);
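How a control output might be routed to a connected object can be sketched as a small routing table. Everything here is hypothetical: `ControlReceiverSketch`, `ControlSenderSketch`, `connect`, and `RecordingReceiver` are illustration-only names, and the real framework resolves the mapping in its own way. Each output maps to a single receiver and pin, reflecting the one-to-one connection of control pins.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical receiver interface; stands in for an object's controlSet().
class ControlReceiverSketch {
public:
    virtual ~ControlReceiverSketch() {}
    virtual int controlSet(int pin, float val) = 0;
};

// Hypothetical sender: each control output maps to one (receiver, pin) pair.
class ControlSenderSketch {
public:
    explicit ControlSenderSketch(std::size_t numOutputs) : m_routes(numOutputs) {}

    void connect(std::size_t index, ControlReceiverSketch* target, int targetPin) {
        assert(index < m_routes.size());
        m_routes[index].target = target;
        m_routes[index].pin = targetPin;
    }

    // Same shape as setControlOut(int index, float value) shown above.
    void setControlOut(int index, float value) {
        if (index < 0 || static_cast<std::size_t>(index) >= m_routes.size()) {
            return;  // unknown output: ignore
        }
        const Route& r = m_routes[static_cast<std::size_t>(index)];
        if (r.target != nullptr) {
            r.target->controlSet(r.pin, value);
        }
    }

private:
    struct Route {
        Route() : target(nullptr), pin(0) {}
        ControlReceiverSketch* target;
        int pin;
    };
    std::vector<Route> m_routes;
};

// Minimal receiver that records the last value it was given.
class RecordingReceiver : public ControlReceiverSketch {
public:
    RecordingReceiver() : lastPin(-1), lastValue(0.0f) {}
    int controlSet(int pin, float val) override {
        lastPin = pin;
        lastValue = val;
        return 0;
    }
    int lastPin;
    float lastValue;
};
```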

To be able to externally read the value of a control variable from an audio object, that data must be placed in the state memory of the object and described in the description file used by the GTT tool. This is done through the Device Description section.

Finally, objects that do not interact with any controls do not need to override this method.

controlSet MUST NOT write to another object's memory if that memory is part of a larger block of memory that must be changed all together. For example, controlSet must not write into memory containing filter coefficients, because changing one coefficient alone is bound to break the filter behavior.