3.2.1. Audio Object Memory

This section contains the following topics:

- API

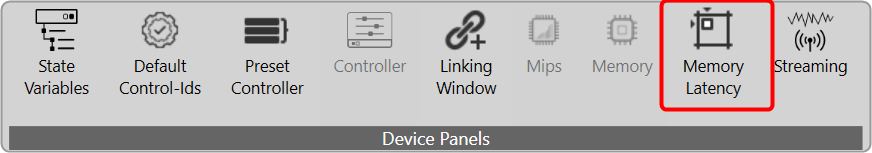

- Memory Configuration on GTT

- Memory allocation in framework

- Audio Object Memory Declaration and Usage

API

The following API fills the memory records for a given target and data format:

```cpp
xUInt8 getMemRecords(xAF_memRec* memTable, xAF_memRec& scratchRecord, xInt8 target, xInt8 format);
```

- The GTT calls getMemRecords() when it needs to know how many memory records an object requires, along with the type, latency and size of each record. These depend on the target and the data format.

- The memory record sizes may depend on object variables such as m_NumElements, m_NumAudioIn, m_NumAudioOut, BlockLength, SampleRate and additional configuration data.

By default, getMemRecords() returns zero records and does not fill the provided memTable. If your object does not require any dynamic memory, you do not need to override this method.
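For an object that does need memory, an override might look like the sketch below. It is illustrative only: the typedefs, the xAF_memRec member types, the CAudioObject stub, the member name m_BlockLength, the memType code 0 and the class MyGainObject are hypothetical stand-ins so the example compiles on its own; the real definitions come from the xAF headers. It also assumes the return value counts only the memTable entries, not the scratch record.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Stand-ins so the sketch compiles on its own; real types come from xAF headers.
typedef std::uint8_t xUInt8;
typedef std::int8_t  xInt8;

struct xAF_memRec {          // member names from the xAF_memRec table; types assumed
    std::size_t alignment;
    int         memType;     // hypothetical type code
    std::size_t size;        // assumed to be in bytes
    int         memLatency;  // 1 = low latency ... 5 = high latency
};

// Minimal stand-in for the audio object base class.
class CAudioObject {
public:
    virtual ~CAudioObject() = default;
    // Default behaviour: no records requested, memTable left untouched.
    virtual xUInt8 getMemRecords(xAF_memRec* memTable, xAF_memRec& scratchRecord,
                                 xInt8 target, xInt8 format) {
        (void)memTable; (void)scratchRecord; (void)target; (void)format;
        return 0;
    }
protected:
    unsigned m_NumAudioIn = 2;    // illustrative values; set by the framework in practice
    unsigned m_BlockLength = 64;  // hypothetical member name for BlockLength
};

// Hypothetical object: persistent per-channel state plus one block of scratch.
class MyGainObject : public CAudioObject {
public:
    xUInt8 getMemRecords(xAF_memRec* memTable, xAF_memRec& scratchRecord,
                         xInt8 target, xInt8 format) override {
        assert(memTable != nullptr);
        (void)target; (void)format;  // a real object may size records per target/format

        // Record 0: coefficient (persistent) memory, one float of state per input.
        memTable[0].alignment  = alignof(float);
        memTable[0].memType    = 0;
        memTable[0].size       = m_NumAudioIn * sizeof(float);
        memTable[0].memLatency = 1;

        // Scratch: one block of intermediate samples, shared with all other objects.
        scratchRecord.alignment  = 16;
        scratchRecord.memType    = 0;
        scratchRecord.size       = m_BlockLength * sizeof(float);
        scratchRecord.memLatency = 2;

        return 1;  // assumed: number of memTable entries filled (scratch not counted)
    }
};
```

Sizing the records from m_NumAudioIn and the block length, rather than hard-coding them, is what lets the GTT recompute the records whenever the object's configuration changes.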

Memory Configuration on GTT

When an audio object is dragged into the panel in the SFD, the GTT calls getMemRecords() to collect the object's memory record details and update the memory latency table. This is repeated for every audio object in the SFD. Once the design is complete, it must be saved.

The user can open the memory latency editor to edit the latency levels. Note that if the object's properties are edited and saved after the latency levels have been saved, the custom latency levels for that object are lost: the GTT calls getMemRecords() again, which resets them to the default latency levels.

Scratch memory does not need to be allocated for each audio object, because all audio objects can share the same scratch memory. The GTT calculates the maximum scratch memory size, the maximum alignment and the minimum latency requested by the audio objects, and puts these values into the AudioProcessing chunk.
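The aggregation step can be pictured as a single pass over all scratch requests. This C++ sketch is illustrative only: ScratchRequest and combineScratch() are hypothetical names, not part of the GTT or xAF. It assumes alignments are powers of two (so the largest alignment also satisfies every smaller one) and that "minimum latency" means the lowest memLatency level.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical mirror of the scratch-relevant fields of xAF_memRec.
struct ScratchRequest {
    std::size_t size;
    std::size_t alignment;  // assumed to be a power of two
    int memLatency;         // 1 (low latency) .. 5 (high latency)
};

// Combine every object's scratch request into one shared record:
// largest size, largest alignment, lowest (fastest) latency level.
ScratchRequest combineScratch(const std::vector<ScratchRequest>& requests) {
    ScratchRequest shared{0, 1, 5};  // neutral start values
    for (const ScratchRequest& r : requests) {
        assert(r.memLatency >= 1 && r.memLatency <= 5);
        shared.size       = std::max(shared.size, r.size);
        shared.alignment  = std::max(shared.alignment, r.alignment);
        shared.memLatency = std::min(shared.memLatency, r.memLatency);
    }
    return shared;
}
```

Taking the maximum size works because the objects run one after another within an interrupt, so at any moment only one of them is using the shared scratch region.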

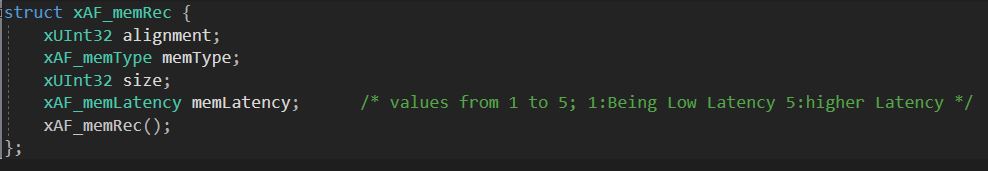

The elements of the memTable array must be of the data type xAF_memRec, whose members are listed below:

| Member | Description |
| --- | --- |
| alignment | Required alignment of the memory region. |
| memType | Type of the memory to be allocated. memType could be: |
| size | Size of the memory to be allocated. |
| memLatency | Latency level of the memory, from 1 (low latency) to 5 (high latency). |

When the signal flow is sent to the target device, the memory record details are sent as part of the audio processing chunk and the audio object chunk in the signal flow write command.

Memory allocation in framework

The memory records are part of the flash file, so the platform must parse the flash file using the DSFD parser, which provides the audio processing properties and audio object properties structures.

The platform must register its platform-dependent memory allocator and deallocator in the constructor CAudioProcessing::CAudioProcessing().

Next, call CAudioProcessing::initFramework(), passing the audio processing and audio object properties structures. initFramework() allocates the requested memory using the platform-dependent allocator, then calls CAudioObject::setRecordPointers() (which sets m_MemRecPtrs) and CAudioObject::init() for every object.
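The allocation flow described above can be sketched as follows. All names here (MemRec, AllocFn, FreeFn, platformAlloc(), SketchObject, SketchFramework) are hypothetical stand-ins for the platform allocator, CAudioObject and CAudioProcessing; the real signatures may differ. The shared scratch record is left out for brevity, and C++17 aligned operator new takes the place of a real platform allocator.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <new>
#include <vector>

// Hypothetical mirror of xAF_memRec (member order and types assumed).
struct MemRec {
    std::size_t alignment;
    int         memType;
    std::size_t size;
    int         memLatency;
};

// Hypothetical allocator/deallocator signatures; the real registration API may differ.
using AllocFn = void* (*)(std::size_t size, std::size_t alignment, int memLatency);
using FreeFn  = void (*)(void* ptr, std::size_t alignment);

// Example "platform-dependent" allocator backed by aligned operator new (C++17).
void* platformAlloc(std::size_t size, std::size_t alignment, int memLatency) {
    assert(alignment != 0 && (alignment & (alignment - 1)) == 0);  // power of two
    (void)memLatency;  // a real platform might map latency levels to memory banks
    return ::operator new(size, std::align_val_t(alignment));
}

void platformFree(void* ptr, std::size_t alignment) {
    ::operator delete(ptr, std::align_val_t(alignment));
}

// Stand-in audio object that receives its record pointers before init().
struct SketchObject {
    std::vector<MemRec> records;
    std::vector<void*>  memRecPtrs;  // plays the role of m_MemRecPtrs
    bool initialized = false;
    void setRecordPointers(const std::vector<void*>& ptrs) { memRecPtrs = ptrs; }
    void init() { initialized = true; }
};

// Stand-in for CAudioProcessing: allocators registered in the constructor,
// memory allocated and objects initialised in initFramework().
class SketchFramework {
public:
    SketchFramework(AllocFn alloc, FreeFn release) : alloc_(alloc), free_(release) {}
    SketchFramework(const SketchFramework&) = delete;
    SketchFramework& operator=(const SketchFramework&) = delete;
    ~SketchFramework() {
        for (const Block& b : blocks_) free_(b.ptr, b.alignment);
    }

    void initFramework(const std::vector<SketchObject*>& objects) {
        for (SketchObject* obj : objects) {
            std::vector<void*> ptrs;
            for (const MemRec& r : obj->records) {
                void* p = alloc_(r.size, r.alignment, r.memLatency);
                blocks_.push_back({p, r.alignment});
                ptrs.push_back(p);
            }
            obj->setRecordPointers(ptrs);  // cf. CAudioObject::setRecordPointers()
            obj->init();                   // cf. CAudioObject::init()
        }
    }

private:
    struct Block { void* ptr; std::size_t alignment; };
    AllocFn alloc_;
    FreeFn  free_;
    std::vector<Block> blocks_;
};
```

Passing the allocator in as function pointers keeps the framework code platform-independent: only platformAlloc() and platformFree() would change between targets.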

Audio Object Memory Declaration and Usage

There are two types of memory used when allocating memory for audio objects:

- Scratch Memory: Scratch memory is non-persistent memory that an audio object uses only during an audio interrupt. Data in scratch memory is not guaranteed to remain unchanged from one audio interrupt to the next. Up to and including Deep Purple, each object is restricted to one scratch memory record. Developers can specify the size, alignment and latency level required.

- Coefficient Memory: Coefficient memory is any other persistent memory required by the audio object. There is no limit to the number of records developers can create. Developers can configure the size, alignment and latency level of each record individually.

Audio Buffers: The audio buffers passed to the calc function are meant only for reading input samples and writing output samples; they must not be used for intermediate calculations. If intermediate buffers are required, request scratch memory. The reason is that the buffer for each channel is not guaranteed to be unique when pins are unconnected. For unconnected input pins, xAF provides a single zero-filled buffer that is shared by all audio objects in an xAF instance. Clearing this buffer on every call would be expensive (MIPS-wise), so audio objects must never write to it: every object with unconnected input pins reads from it. For unconnected output pins, xAF allocates a single “dummy” buffer shared by all unconnected output pins, rather than a unique buffer per channel.
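A minimal sketch of these rules, under stated assumptions: calcBlock() is a hypothetical single-channel kernel, not the xAF calc() signature, and the scratch and state pointers stand in for memory obtained through m_MemRecPtrs. The input is taken as const so that an accidental write to the shared zero buffer fails to compile. Intermediate values go to scratch; only the smoother state, which must survive between interrupts, lives in coefficient memory.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>

// Hypothetical level-dependent gain for one channel.
//  in      : read-only input (may be the shared all-zero buffer)
//  out     : output (may be the shared dummy buffer if unconnected)
//  scratch : non-persistent memory, valid only during this call
//  state   : persistent coefficient memory, kept between calls
void calcBlock(const float* in, float* out, float* scratch, float* state,
               std::size_t blockLength) {
    assert(in && out && scratch && state);
    const float alpha = 0.1f;  // smoothing coefficient (illustrative value)

    // Stage 1: the envelope goes into scratch, never into the input buffer.
    for (std::size_t n = 0; n < blockLength; ++n) {
        *state += alpha * (std::fabs(in[n]) - *state);  // persistent one-pole smoother
        scratch[n] = *state;
    }
    // Stage 2: read input and scratch, write only the output buffer.
    for (std::size_t n = 0; n < blockLength; ++n) {
        out[n] = in[n] / (1.0f + scratch[n]);
    }
}
```

Because stage 2 reads in[n] before writing out[n] at the same index, this particular kernel also works when xAF assigns the same buffer to input and output.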

For audio objects that support in-place processing, xAF assigns the same buffer to a channel's input and output when all pins are connected. If any pins are unconnected, the input and output buffers differ. Audio object developers should therefore not assume that the input and output buffers are either the same or different. If the algorithm depends on a particular input/output buffer constellation, it is highly recommended to check for it explicitly.
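Such a check might look like the following sketch. smooth3() is a hypothetical 3-tap moving average with zero padding at the block edges. Because each output needs the next input sample, computing it in place would overwrite input that is still needed, so the sketch compares the buffer pointers and copies the input to scratch memory only when they are identical. It assumes xAF hands out either identical or fully separate (non-overlapping) buffers, so pointer equality is the only case to test.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>

// Hypothetical 3-tap moving average over one block (zero padding at the edges).
void smooth3(const float* in, float* out, float* scratch, std::size_t blockLength) {
    assert(blockLength > 0 && scratch != nullptr);
    const float* src = in;
    if (in == out) {
        // In-place constellation: preserve the input in scratch memory first,
        // otherwise out[n - 1] would already have overwritten in[n - 1].
        std::memcpy(scratch, in, blockLength * sizeof(float));
        src = scratch;
    }
    for (std::size_t n = 0; n < blockLength; ++n) {
        const float left  = (n > 0) ? src[n - 1] : 0.0f;
        const float right = (n + 1 < blockLength) ? src[n + 1] : 0.0f;
        out[n] = (left + src[n] + right) / 3.0f;
    }
}
```

Copying only in the aliased case keeps the common out-of-place path free of the extra memcpy, while both constellations produce identical output.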