
3.1.1. Metadata

Metadata is design-time information that audio objects use to describe their features and attributes. It is stored in the audio object code. This information can be used, for example, to convey memory usage or to check compatibility between audio objects.

It also provides the tool with constraints on parameters and with information describing controls and audio channels.

There are three types of metadata:

- Dynamic: accepts configuration parameters and provides data specific to those parameters.

- Static: constant data that does not take any parameters.

- Real-time: metadata specific to a connected target device.

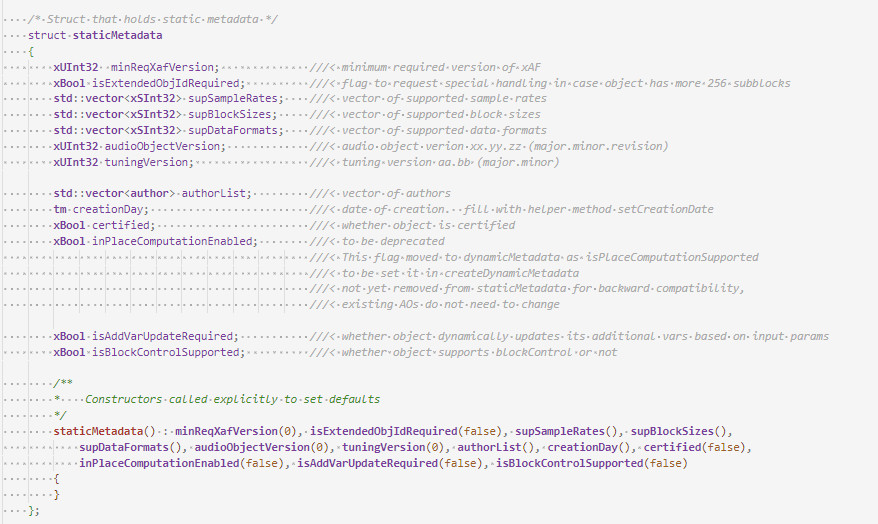

The Static Metadata represents data that will not change based on configuration. It is provided as is.

There are two API methods related to this feature.

- createStaticMetadata

- getStaticMetadata

virtual void createStaticMetadata();

staticMetadata getStaticMetadata() { return m_StaticMetadata; }

Create Static Metadata

This method is intended to be overridden by each class derived from AudioObject. Its goal is to populate the protected member m_StaticMetadata. An example of how to do this is in AudioObject.cpp, and the basic audio objects included with xAF also implement this method.

Any object updating to the new API should override this method and set the following fields:

- minReqXafVersion – set this to an integer corresponding to the major version of xAF (ACDC == 1, Beatles == 2, etc.).

- isExtendedObjIdRequired – false for most objects. This flag enables support for more than 256 subblocks.

- supSampleRates – list of all supported sample rates (leave blank if there are no restrictions)

- supBlockSizes – list of all supported block sizes (leave blank if there are no restrictions)

- supDataFormats – list of supported calcObject data formats (leave blank if there are no restrictions)

- audioObjectVersion – condensed three-part version number, created with the helper method:

- void setAudioObjectVersion(unsigned char major, unsigned char minor, unsigned char revision)

- It is up to the audio object to determine how to manage these versions.

- tuningVersion – condensed two-part version number, created with the helper method:

- void setTuningVersion(unsigned char major, unsigned char minor)

- Change this version number only when appropriate.

- Follow these rules:

- Increment the minor version when a new release has *additional* tuning but previous tuning data can still be loaded successfully.

- Increment the major version when the new release is not compatible at all with previous tuning data.

- If the tuning structure does not change, do not change this version.

- authorList – list of authors (optional)

- creationDay – date of creation of the object

- certified – whether this object has undergone certification

- inPlaceComputationEnabled – whether this object requires input and output buffers to be the same (deprecated; see In-Place Computation below)

- isAddVarUpdateRequired – whether the tuning tool should assume that additional variables can change any time the main object parameters are updated.

- Set this to true if the sizes of your additional variables depend in some way on the inputs.

- Example: the number of input channels is configurable by the user, and the size of the first additional variable always equals the number of input channels.
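The tuning-version rules above imply a simple load-compatibility check: tuning data saved with the same major version and an equal or older minor version can still be loaded. The sketch below models that check in standalone C++. The byte packing and the names `packTuningVersion`, `tuningMajor`, `tuningMinor` and `canLoadTuning` are hypothetical illustrations, not the condensed format produced by setTuningVersion or any xAF API. Rejecting data saved with a *newer* minor version is a conservative assumption, not a rule stated in this guide.

```cpp
#include <cassert>   // for assert() in usage checks
#include <cstdint>

// Hypothetical packing: major in the high byte, minor in the low byte.
// The real condensed format produced by setTuningVersion may differ.
constexpr std::uint16_t packTuningVersion(unsigned char majorVer, unsigned char minorVer) {
    return static_cast<std::uint16_t>((majorVer << 8) | minorVer);
}

constexpr unsigned char tuningMajor(std::uint16_t v) { return static_cast<unsigned char>(v >> 8); }
constexpr unsigned char tuningMinor(std::uint16_t v) { return static_cast<unsigned char>(v & 0xFF); }

// Applies the rules above:
//  - a major change means older tuning data is incompatible;
//  - a minor change only adds tuning, so data from an older minor still loads.
// Data from a newer minor version is rejected (conservative assumption).
constexpr bool canLoadTuning(std::uint16_t objectVersion, std::uint16_t savedVersion) {
    return tuningMajor(savedVersion) == tuningMajor(objectVersion) &&
           tuningMinor(savedVersion) <= tuningMinor(objectVersion);
}
```

For example, an object at tuning version 2.3 would accept data saved as 2.1 or 2.3, but not 2.4 or 1.5.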

Get Static Metadata

This method simply returns a copy of m_StaticMetadata. It is not virtual.
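The create/get pair can be sketched as follows: a virtual createStaticMetadata() that a derived object overrides to fill the protected m_StaticMetadata, and a non-virtual getStaticMetadata() that returns a copy. This is a self-contained approximation, assuming a simplified `staticMetadata` struct with only some of the fields listed above and assumed field types; the real xAF types and `AudioObject` base class differ. The class `MyGain`, its field values and the author name are hypothetical.

```cpp
#include <cassert>   // for assert() in usage checks
#include <string>
#include <vector>

// Simplified stand-in for the xAF static metadata struct (field types assumed).
struct staticMetadata {
    int minReqXafVersion = 0;
    bool isExtendedObjIdRequired = false;
    std::vector<unsigned int> supSampleRates;   // empty = no restriction
    std::vector<unsigned int> supBlockSizes;    // empty = no restriction
    std::vector<std::string> authorList;
    bool certified = false;
    bool isAddVarUpdateRequired = false;
};

// Stand-in base class; only the metadata-related members are modeled.
class AudioObject {
public:
    virtual ~AudioObject() = default;
    virtual void createStaticMetadata() {}                          // overridden by each object
    staticMetadata getStaticMetadata() { return m_StaticMetadata; } // not virtual; returns a copy
protected:
    staticMetadata m_StaticMetadata;
};

// Hypothetical object that restricts sample rates but not block sizes.
class MyGain : public AudioObject {
public:
    void createStaticMetadata() override {
        m_StaticMetadata.minReqXafVersion = 2;            // e.g. requires "Beatles"
        m_StaticMetadata.isExtendedObjIdRequired = false; // fewer than 256 subblocks
        m_StaticMetadata.supSampleRates = {48000, 96000};
        m_StaticMetadata.supBlockSizes.clear();           // no restriction
        m_StaticMetadata.authorList = {"Example Author"};
        m_StaticMetadata.certified = false;
        // Additional variable sizes do not depend on configuration here.
        m_StaticMetadata.isAddVarUpdateRequired = false;
    }
};
```

Because getStaticMetadata() returns by value, a caller that modifies the returned struct does not change the object's own m_StaticMetadata.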

In-Place Computation

The static member inPlaceComputationEnabled is being deprecated and moved to dynamic metadata to support target/core-specific implementations. The static member is kept only for backward compatibility and will be fully deprecated at a later point.

Do not use this struct member in new implementations.

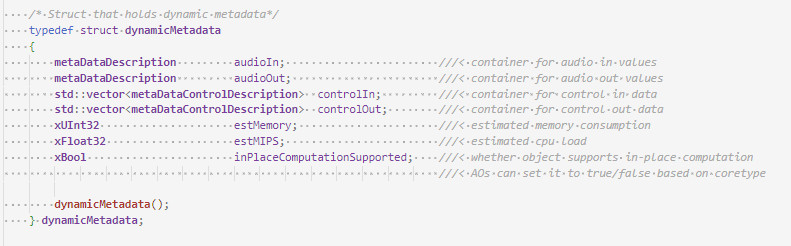

Dynamic metadata creation is similar to static metadata creation, but it accepts arguments for the creation process, hence the name "dynamic". The object receives all configuration data being considered (in most cases supplied by GTT) and, in response, writes the relevant information to the member m_DynamicMetadata.

virtual void createDynamicMetadata(ioObjectConfigInput& configIn, ioObjectConfigOutput& configOut);

dynamicMetadata getDynamicMetadata() { return m_DynamicMetadata; }

Create Dynamic Metadata

createDynamicMetadata is called after a successful call to getObjectIo. It can further restrict values in the ioObjectConfigOutput struct. All required information is passed in with configIn.

- audioIn & audioOut – instances of type metaDataDescription that label and set restrictions for inputs and outputs. A label need not be specified if a generic label (e.g., Input 1) suffices; otherwise, supply a label for each input and output.

- controlIn & controlOut – vectors of type metaDataControlDescription that label and specify value ranges for each control. The number of controls is dictated by other parameters, so it does not need to be bounded by a min and max here. Note: Min and Max are not enforced; they are only informative to the user.

- estMemory – estimated memory consumption for the current configuration (in bytes).

- estMIPS – estimated processor load (in millions of cycles per second, so strictly speaking not MIPS).
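A minimal sketch of a createDynamicMetadata override for a hypothetical gain-like object follows. The stand-in structs reuse the names from the list above (`ioObjectConfigInput`, `ioObjectConfigOutput`, `metaDataDescription`, `metaDataControlDescription`) plus a `dynamicMetadata` struct, but every field shown is an assumption, not the real xAF definition. The memory and cycle estimates are placeholder formulas, not measured values.

```cpp
#include <cassert>   // for assert() in usage checks
#include <string>
#include <vector>

// Stand-in types with hypothetical fields, mirroring the names in the text.
struct ioObjectConfigInput  { unsigned numAudioIn; unsigned blockSize; unsigned sampleRate; };
struct ioObjectConfigOutput { unsigned numAudioOut; unsigned numControlIn; };

struct metaDataDescription { std::vector<std::string> labels; };
struct metaDataControlDescription { std::string label; float minValue; float maxValue; };

struct dynamicMetadata {
    metaDataDescription audioIn, audioOut;
    std::vector<metaDataControlDescription> controlIn, controlOut;
    unsigned long estMemory = 0;   // bytes
    float estMIPS = 0.0f;          // millions of cycles per second
};

class GainSketch {
public:
    void createDynamicMetadata(ioObjectConfigInput& configIn, ioObjectConfigOutput& configOut) {
        // Further restrict the output struct: one output per input, one gain control.
        configOut.numAudioOut = configIn.numAudioIn;
        configOut.numControlIn = 1;

        m_DynamicMetadata = dynamicMetadata{};
        // Audio labels left empty so generic labels ("Input 1", ...) suffice.
        // Min/max are informative only; nothing here enforces them.
        m_DynamicMetadata.controlIn.push_back({"Gain [dB]", -96.0f, 24.0f});

        // Placeholder estimates: one float of state per channel and
        // roughly one cycle per sample per channel.
        m_DynamicMetadata.estMemory = configIn.numAudioIn * sizeof(float);
        m_DynamicMetadata.estMIPS = configIn.numAudioIn * configIn.sampleRate / 1.0e6f;
    }
    dynamicMetadata getDynamicMetadata() { return m_DynamicMetadata; }  // returns a copy
private:
    dynamicMetadata m_DynamicMetadata;
};
```

Following the order described above, a tool would call this only after getObjectIo has succeeded for the same configuration, then read the result through getDynamicMetadata().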

Get Dynamic Metadata

This method simply returns a copy of m_DynamicMetadata. Note that it is not virtual.

Description of Structures

These structures are used by the tool during signal design. The input configuration struct holds all *attempted* parameters; the output struct is used to constrain audio inputs and outputs and to report the correct number of control inputs and outputs.

In-Place Computation

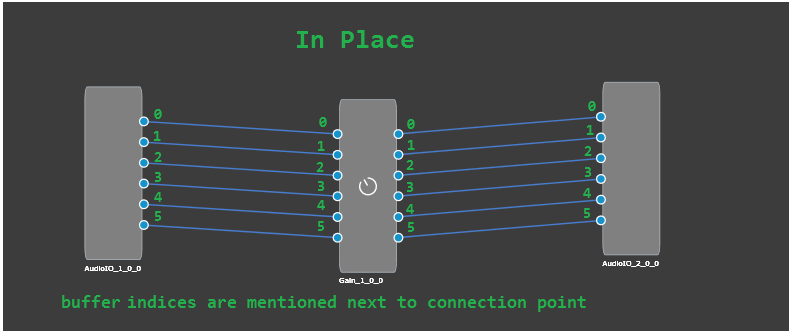

This feature lets an audio object tell GTT whether it can operate in in-place computation mode. In this mode, the audio object uses the same buffers for input and output. This option has been moved from static metadata to dynamic metadata to support a kernel-based decision: the AO developer can decide, based on the target architecture, whether it is beneficial to run the calc function in-place.
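In-place mode is only safe if the calc function reads each input sample before it writes the corresponding output sample. The sketch below shows a hypothetical per-channel gain kernel that gives correct results whether `out` points to separate buffers or to the same buffers as `in`. The function name `gainCalc` and the buffer layout (one `float*` per channel) are assumptions, not the xAF calc signature.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical calc kernel: out[ch][n] = gain * in[ch][n].
// Each sample is read and then immediately overwritten at the same index,
// so the result is identical when out[ch] == in[ch] (in-place mode).
void gainCalc(float* const* in, float* const* out,
              std::size_t numChannels, std::size_t blockSize, float gain) {
    for (std::size_t ch = 0; ch < numChannels; ++ch) {
        assert(in[ch] != nullptr && out[ch] != nullptr);
        for (std::size_t n = 0; n < blockSize; ++n) {
            out[ch][n] = gain * in[ch][n];
        }
    }
}
```

A kernel that reads earlier input samples after their output slots were already written, such as a naive FIR filter, would need its own history buffer before it could safely run in-place.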

GTT analyses the signal flow and calculates the number of buffers it requires. If the audio object developer sets isInplaceComputationSupported, GTT tells the framework to allocate input buffers only.

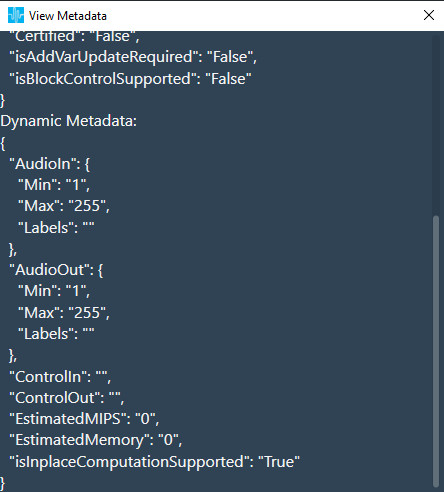

The isInplaceComputationSupported flag can be checked in the audio object’s dynamic metadata.

For example, a Gain object configured for 6 channels:

- If isInplaceComputationSupported is not set, it will use a total of 12 buffers.

- If isInplaceComputationSupported is set, it will use a total of 6 buffers.
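The buffer arithmetic in the Gain example can be written out as a small function. This is a simplified model, assuming one buffer per audio pin and ignoring any buffer sharing GTT performs elsewhere in the signal flow; `countIoBuffers` is a hypothetical name.

```cpp
#include <cassert>

// Simplified model: one buffer per audio pin.
unsigned countIoBuffers(unsigned numIn, unsigned numOut, bool inPlace) {
    if (inPlace) {
        assert(numIn == numOut);  // in-place requires equal pin counts
        return numIn;             // outputs reuse the input buffers
    }
    return numIn + numOut;        // separate input and output buffers
}
```

With 6 inputs and 6 outputs this gives 12 buffers normally and 6 in in-place mode, matching the example above.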

The in-place computation feature has the following benefits:

- It reduces flash size.

- It reduces the number of I/O streams, which improves memory performance on embedded targets.

Current Limitations and Additional Conditions

An audio object is considered for in-place computation only if it satisfies the following three conditions:

- The audio object must have the dynamic metadata flag isInplaceComputationSupported set to true for the selected core type.

- The audio object must have an equal number of input and output pins.

- All audio pins must be connected.
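The three conditions can be expressed as a single predicate. The `AoPinInfo` struct and the `allTrue` and `isInPlaceEligible` functions are hypothetical, used only to make the rule concrete; GTT's actual check is not described in this guide.

```cpp
#include <cassert>   // for assert() in usage checks
#include <vector>

// Hypothetical summary of one audio object in a signal flow.
struct AoPinInfo {
    bool inplaceSupportedForCore;       // dynamic metadata flag for the selected core
    std::vector<bool> inputConnected;   // one entry per input pin
    std::vector<bool> outputConnected;  // one entry per output pin
};

bool allTrue(const std::vector<bool>& v) {
    for (bool b : v) {
        if (!b) return false;
    }
    return true;
}

bool isInPlaceEligible(const AoPinInfo& ao) {
    return ao.inplaceSupportedForCore                                // condition 1
        && ao.inputConnected.size() == ao.outputConnected.size()     // condition 2
        && allTrue(ao.inputConnected) && allTrue(ao.outputConnected); // condition 3
}
```

An object failing any one condition is treated as not eligible, so it keeps separate input and output buffers.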